Attempts to measure diversity of CodeLM Generations

Introduction

Code Language Models are used in the context of code completion and chat interfaces day in day out by software developers to write and contribute to code bases. Programming Languages is a formal in nature with a fixed, strict set of rules. This pushes us to study quanitifying and measuring how diverse are the generations generated by these models in various settings, more importantly it makes us wonder what diversity is in the context of code. This post aims to explore and understand the diversity of generations of Code LMs. And discover various shortcomings in measuring diversity of generations of Code LMs in a controlled settings. In the below post I use HumanEval benchmark which is a benchmark evaluating function level code completion by CodeLMs for functional correctness measuring the accuracy, passing the given test cases within first k out of n samples. This post widely tries to explore how to evaluate diversity in the context of code and shortcomings of the current methods to measure diversity.

Semantic and Syntactic Diversity

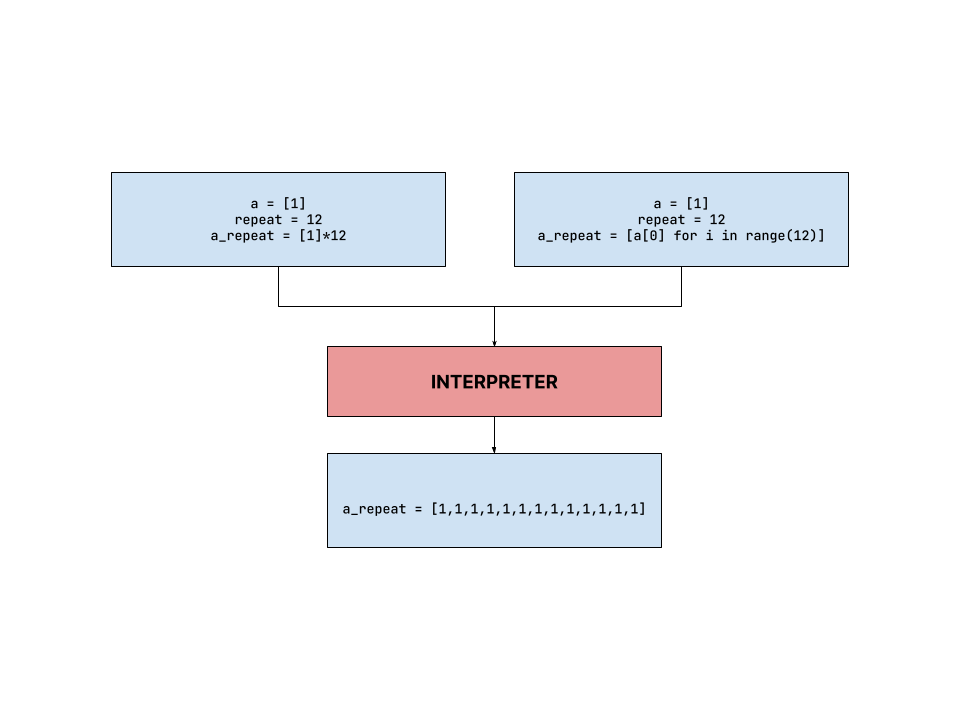

The general notion of syntax and semantics in the context of programming languages is slightly different from that of natural language. In the context of programming languages syntax refers to the structure of the code, the rules that govern the structure of the code. Semantics refers to the intention of a given piece of code. Here is a small example of semantically similar code snippets which can be expressed varied syntax which are functionally equivalent.

The formal structure of the code allows for a wide range of syntactic variations. T Diversity of a CodeLM in the context of Code can be thus formally defined as functionally equivalent, semantically similar generations. While the idea of diversity might be very small in small snippets of code, more abstract real world software

HumanEval Benchmark

HumanEval benchmark introduced in the Codex is a popular benchmark widely used to measure the performance of CodeLMs. It is a benchmark evaluating function level code completion by CodeLMs for functional correctness measuring the accuracy, passing the given test cases within first k out of n samples.

Diversity Metrics

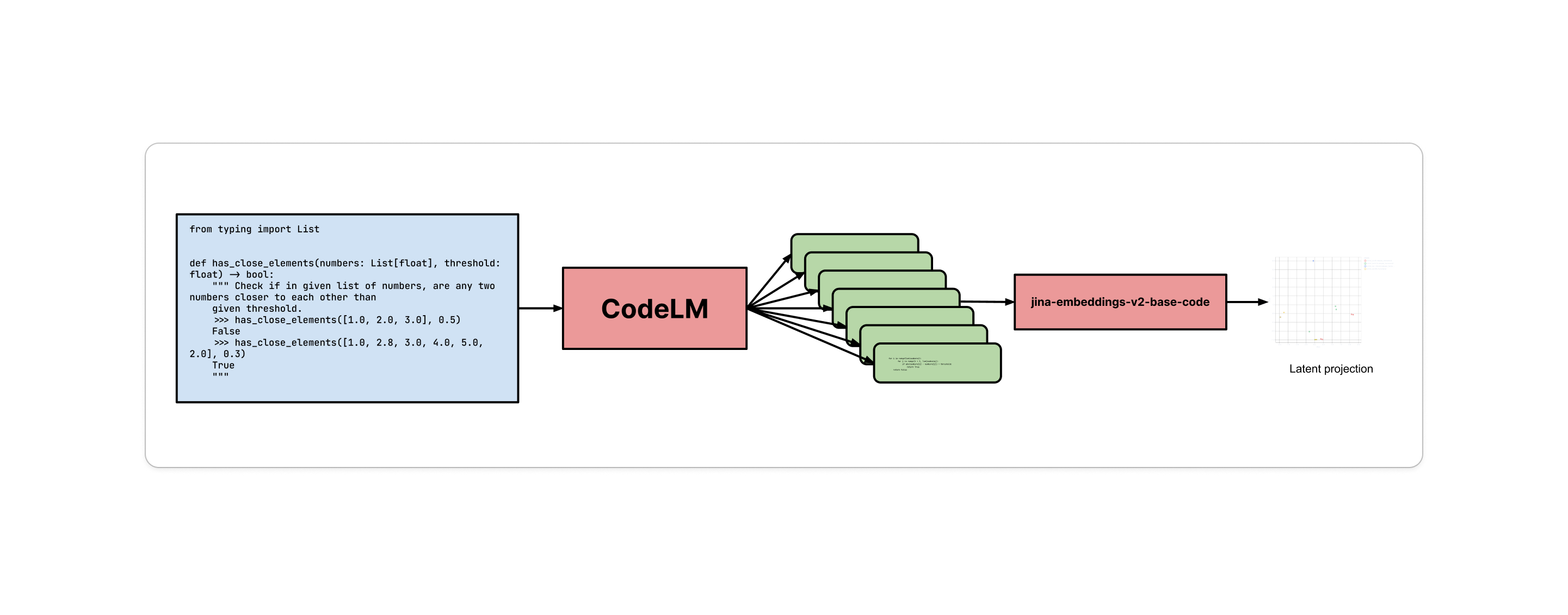

The coherent way to quantify diversity would be to look at the latent representations of different completions given a prompt. This can be done by using a good embedding model and doing some cluster analysis in the latent representation of different completion given. While this method has it’s shortcomings, it is a good starting point to quantify diversity. But the ideal end goal would be to measure how semantically diverse and functionally equivalent the generations are given a singular prompt.

Limitation of Code Embedding models to model semantics

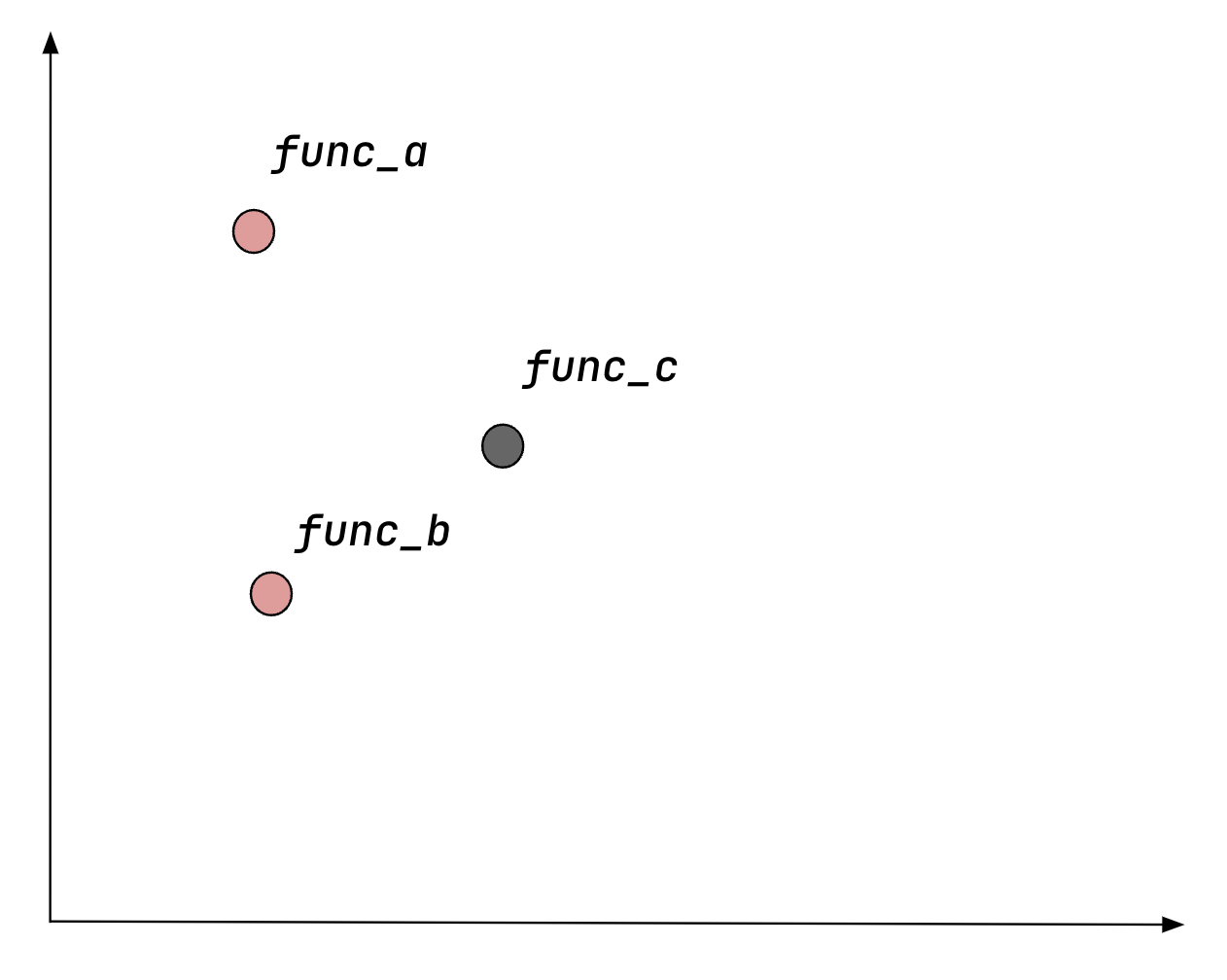

Unlike natural language wherein, the semantics of a sentence can be understood by looking at the words and their order, in the context of programming languages, the semantics of a code snippet is not just the syntactical elements and their order. The semantics of a code snippet is also dependent on the structure of the code, the states used, the functions called, the libraries imported, etc. This makes it difficult to model the semantics of a code snippet using just the lexical tokens and their order. And this makes the model heavily biased to syntax rather than actual semantic similarity. For instance, consider three functions,

def func_a(l):

return max(l)

def func_b(l):

max_element = l[0]

for i in l:

if i > max_element:

max_element = i

return max_element

def func_c(l):

max_element = l[0]

return max_element

func_a and func_b are semantically similar functionally trying to find the maximum element in a list. But func_c is actually bugged though naming conventions of the variable tends to be make the model map the latents to be similar. Though the actual semantically similar functional equivalent of func_a is func_b. The model tend to be severely biased towards the syntax rather than the actual semantics of the code.

Observations

For the sake of controlled experiment, we take the following models and do the analysis on the following models,

- deepseek-coder-1.3B

- deepseek-coder-6.7B

- deepseek-coder-33B

We use n_samples=10 and temperature=0.2to maintain consistency across scale.

The choice is with the assumption that they are trained on similar if not same amount of data and are base models with no high quality fine-tuning data encapsulated in the training run, since the dynamics of generation quality might differ post finetuning phase. We use average pairwise distance and squared sum error as the metrics to measure the closeness and tighness of the latent representations of the generations.

First grasp of the results tends to conclude that the generations of the smaller models are more diverse compared to that of larger scales, but reality is quite different.

Scenario-1 - Easy to solve problem

These are the problems that are mostly solved by all three model in pass@100/pass@10 cap. A careful exploration on the generation reveals how the 1.3B model specifically generate code which has different newlines count and white space count. An easy-to-solve example of the same is shown below. In the case the 1.3B model the code generated was not functionally correct in 6 out of 10 cases.

#1.3B--example-1

from typing import List

def has_close_elements(numbers: List[float],threshold: float) -> bool:

numbers.sort()

for i in range(1, len(numbers)):

if numbers[i] - numbers[i-1] < threshold:

return True

return False

#1.3B--example-2

def has_close_elements(numbers: List[float],threshold: float) -> bool:

numbers.sort()

for i in range(1 , len(numbers)) :

if numbers[i] - numbers[i-1] < threshold :

return True

return False

In case of the same example generated by 6.7B and 33B model, the model consistantly the exact same code snippet for easy problems.

from typing import List

def has_close_elements(numbers: List[float], threshold: float) -> bool:

for i in range(len(numbers)):

for j in range(i + 1, len(numbers)):

if abs(numbers[i] - numbers[j]) < threshold:

return True

return False

Exact AST match & Performance

| model name | AST exact match | pass@1 |

|---|---|---|

| Deepseek-coder-1.3B | 60.7% | 30.2% |

| Deepseek-coder-6.7B | 45.6% | 45.0% |

| Deepseek-coder-33B | 31.7% | 53.6% |

The above table was populated to measure exact AST matches of generations which are parseable valid code. The pass@1 metric is the percentage of generations that pass the test cases within the first generation. The results are quite interesting, the 1.3B model has the highest exact AST match amidst the generations. This is inline with the hypothesis that diversity is directly correlated with performance. More diverse the generations, more likely the generations contain the solution. Reiterating the diversity is defined as functionally equivalent, semantically similar generations.

You can explore through the solutions generated by these models here.

Conclusion and Future Directions

Proper measure of diversity defined as functionally equivalent, semantically similar can act as a secondary metric to count onto in addition to performance of a model in correctness of generating solution for the given problem. When a model generates high quality diverse generations, that increases the probability of the output search space containing the solution of solving a problem in a given turn. The current methods to measure diversity in the code are heavily biased towards the syntax rather than the actual semantics of the code which represents the actual useful diversity. The future directions would be to explore more on how to measure the actual diversity of the generations of Code LMs.

Cite

@inproceedings{reshint-code-lm-div,

title = "How diverse are the generations of CodeLMs ?",

author = "Reshinth Adithyan",

month = april,

year = "2024"}